In my last post I covered setup for Burp Suite, as well as the Proxy and Target tabs.

This blog post will cover the Spider, Intruder and Repeater tools, which start to show the usefulness and power of Burp Suite. Since everything is more fun with examples, I'll be using practice hacking sites to demo some of these features. : )

If you don't have Burp Suite set up yet, check out this blog post first.

Spider

First up is the Spider tool, which is a web crawler. Burp's website states:

Burp's cutting-edge web application crawler accurately maps content and functionality, automatically handling sessions, state changes, volatile content, and application logins.

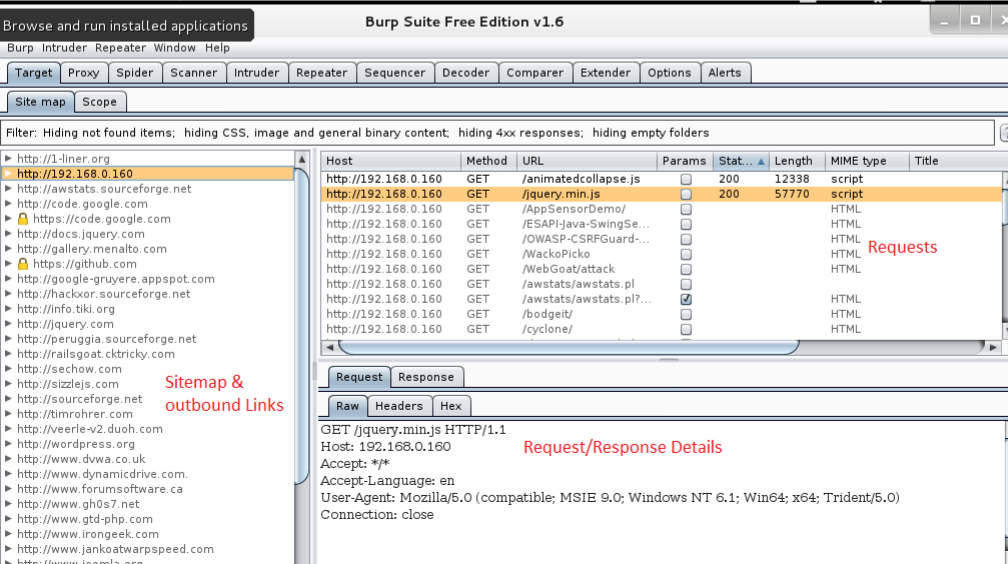

In other words, it programmatically crawls a website for all of its links and adds them to the Site Map view in the Target tab. If you worked through the last post and its examples, then you have already (passively) used the Spider tool.

Why is this useful? Having a complete site map helps you understand the layout of a website and makes you aware of all the different areas where vulnerabilities might exist (for example, seeing the gear icon on a page means that data can be / has been submitted). Doing that by browsing through the website is time-consuming, especially if you have a very complex website.

The Spider tool does all of that for you by recursively finding and requesting all links on a given website.
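To make "recursively finding and requesting all links" concrete, here is a minimal sketch of a crawler in Python. It is not how Burp's Spider is implemented; the `crawl` and `LinkParser` names, the `example.test` pages, and the `max_depth` default are all made up for illustration, and the "site" lives in a dictionary so nothing touches the network.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class LinkParser(HTMLParser):
    """Collects the href value of every <a> tag in a page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def crawl(start_url, fetch, max_depth=5):
    """Breadth-first crawl that only follows links on the start URL's host."""
    host = urlparse(start_url).netloc
    seen = {start_url}
    queue = deque([(start_url, 0)])
    while queue:
        url, depth = queue.popleft()
        if depth >= max_depth:
            continue  # do not look for links below the depth limit
        parser = LinkParser()
        parser.feed(fetch(url))
        for href in parser.links:
            link = urljoin(url, href)  # resolve relative links
            if urlparse(link).netloc == host and link not in seen:
                seen.add(link)
                queue.append((link, depth + 1))
    return sorted(seen)


# Hypothetical three-page site held in memory (an assumption for this sketch).
PAGES = {
    "http://example.test/": '<a href="/level1">one</a> <a href="http://other.test/">out</a>',
    "http://example.test/level1": '<a href="/level2">two</a> <a href="/">home</a>',
    "http://example.test/level2": "<p>no links here</p>",
}

site_map = crawl("http://example.test/", lambda url: PAGES.get(url, ""))
print(site_map)
```

The `seen` set is what keeps the crawl from looping forever when pages link back to each other, and the host check is a crude stand-in for scope.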

Make sure you set your scope before you run the Spider tool!

We covered scope in the last blog post, but as a reminder, it's a way of limiting which websites are shown to you within Burp, and which websites the other tools are allowed to send requests to.

Configuring Scope

In this example, I'll start with XSS Game. First, I turn FoxyProxy on in my browser, and make sure that the settings in the Proxy > Options tab match my FoxyProxy options.

Next, I go to the Target > Scope tab to set my scope. I add a new scope and type 'xss-game'. If you do not set a scope when spidering, it will crawl things outside of your intended target. Depending on what those sites are, that might be bad. 🙃
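As a rough mental model of what a scope rule like 'xss-game' does, here is a small Python sketch. It assumes a simple "host contains this text" check, which is a simplification and not Burp's actual matching logic; the `in_scope` function and the example URLs are assumptions for illustration.

```python
from urllib.parse import urlparse


def in_scope(url, scope_terms):
    """Return True if the URL's host contains any of the scope terms."""
    host = urlparse(url).hostname or ""
    return any(term in host for term in scope_terms)


scope = ["xss-game"]

# Example URLs are assumptions, used only to exercise the check.
target_ok = in_scope("https://xss-game.appspot.com/level1", scope)
other_ok = in_scope("https://www.example.com/", scope)
print(target_ok, other_ok)
```

Anything that fails a check like this is a request you did not mean to send, which is why setting the scope comes before spidering.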

If you go to Spider > Control, you can see that the scope defaults to 'Use suite scope', which is the scope we just defined. You can also set a custom scope if needed, which will function separately from the scope applied to other tools.

Start Spidering

To start spidering, you have a few different options. As we saw in the last blog post, you can right-click a request from numerous places (Proxy > HTTP History, Proxy > Intercept, Target > Site Map, etc.) and send the request to other tools.

In the Target > Site Map view, you can see that I've already visited one page from XSS Game (the splash page).

Right-click this and select 'Spider this branch'. In other views, you can right-click a request and say 'Spider from here'.

When I do this, I can see that the Spider tab has lit up orange.

If you want to see how many requests are being made, or if you need to stop the tool for some reason (maybe things are getting recursively crazy), go to Spider > Control.

If the 'Spider is running' button is grey/depressed, that means it's currently running. You can press the button to stop it, and then clear any upcoming queues if need be.

Here are the results:

In this case, the results aren't that impressive. We probably could have found most of those by just browsing. But, hopefully it's clear how this would be useful for much larger websites.

Form Submissions and Other Options

I also ran the Spider tool on a local copy of OWASP's WebGoat tool (which meant that I had to add localhost to my scope before Spidering). WebGoat is an intentionally vulnerable web app used to teach various attacks, and includes two different login accounts.

When I started running the Spider tool, I saw this pop-up in Burp:

I already knew the login (it was provided), so I typed 'guest' and 'guest' into the username and password fields. But then the form submission pop-up appeared again. If this were a bigger application, this would get very annoying very quickly.

If we go to the Spider > Options tab and scroll down, we see that there are automated responses that we can choose for a login form:

It defaults to 'prompt for guidance' but we could change the settings with our known credentials.

If you scroll up or down on the Spider > Options tab, you'll see that there are automated responses for other forms as well. Be sure to look these over, and either modify the field values or turn automated form submission off.

The Options tab is also where you can turn off 'passive spidering' (where Burp adds information to your Site Map as you browse). Max link depth and parameterized requests per URL can also be configured on this page.

Recap

The Spider tool is a web crawler that recursively requests every link it finds, and adds it to the Site Map. Before you use it, it is important to set the scope (Target tab) and also define the Spider's behavior when it encounters logins or other forms.

Repeater

The Proxy tool lets you intercept requests, and the Site Map and Spider tools help show the breadth and depth of a target. But finding malicious payloads (or CTF flags) happens at the single-request level.

The Repeater tool is a manual tampering tool that lets you replay individual requests and modify them. This is often called 'manual' testing.

I'll be showing the Repeater tool on the XSS Game website (I'm doing this in Firefox; Chrome has an XSS blocking feature).

In the Proxy > HTTP History or Target > Site Map view, right-click on a single request and select 'Send to Repeater'. The Repeater tab should light up orange. Here, I'm right-clicking on a /level1 request for XSS Game where I've sent a query ('hi').

This will show up in the Repeater view as a numbered tab (which you can rename).

If I click 'Go' it will send the request again, and I can see that the query string of 'hi' (once again) did not allow me to move to the next level of XSS Game.

Let's try this again and swap out 'hi' for `<script>alert('hi')</script>`. I can do this by highlighting 'hi' and typing my new payload.

Then, I can click Go. I see in the output that my script tags are still intact, which means that my XSS attack might work. From here, I have two options. I can either:

- Copy/paste my payload into the website and do it manually, or

- Use Burp to automate a browser request.

I want to do the second option, so I right-click anywhere in the Response area, choose 'Request in browser', and select 'original session'.

This will pop up a window with a temporary link. If you copy/paste this into your browser, then you will be redirected to the website with the payload you created in Burp.

Once again, yes, this is a simple example, but it simplifies a lot of the trial-and-error that might occur while testing out a page.
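The trial-and-error loop that Repeater handles (swap a value in the request, resend, check whether the payload came back intact) can be sketched in Python. This is only an illustration: the URL, the `query` parameter name, and the two sample response bodies are assumptions, and the payload is assumed to be a script-tagged `alert`, since the walkthrough talks about script tags staying intact.

```python
import html
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def with_param(url, name, value):
    """Return the URL with one query parameter set to a new value."""
    parts = urlsplit(url)
    query = dict(parse_qsl(parts.query, keep_blank_values=True))
    query[name] = value
    return urlunsplit(parts._replace(query=urlencode(query)))


def reflected_unescaped(payload, body):
    """True if the payload appears in the response exactly as it was sent."""
    return payload in body


payload = "<script>alert('hi')</script>"

# Hypothetical request URL; the real path and parameter name may differ.
url = with_param("http://example.test/level1?query=hi", "query", payload)
print(url)

# Two made-up responses: one echoes the payload as-is, one HTML-escapes it.
vulnerable_body = "<b>Results for " + payload + "</b>"
escaped_body = "<b>Results for " + html.escape(payload) + "</b>"
print(reflected_unescaped(payload, vulnerable_body))
print(reflected_unescaped(payload, escaped_body))
```

An intact payload in the response is only a hint that the XSS might work, which is why the next step is still to load the page in a real browser.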

Better yet, you also get forward and back history buttons, so if you want to go back to a previous request you made, it has already been saved in your history, and it's easily accessible.

Additionally, the response payloads will likely be much bigger on a 'real' website. You can use the search controls at the bottom of the Response view to look for terms (e.g. 'Success!') as either literal strings or regexes.
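The difference between a literal search and a regex search is easy to show with a short Python sketch. The `find_matches` helper and the sample response body are assumptions for illustration, not anything Burp exposes.

```python
import re


def find_matches(body, pattern, is_regex=False):
    """Return every match of a literal string or a regex in the body."""
    regex = re.compile(pattern if is_regex else re.escape(pattern))
    return [match.group(0) for match in regex.finditer(body)]


# Made-up response body for the example.
body = "<h1>Success!</h1><p>Level 2 unlocked</p>"

literal_hits = find_matches(body, "Success!")
regex_hits = find_matches(body, r"Level \d+", is_regex=True)
print(literal_hits, regex_hits)
```

Escaping the literal pattern with `re.escape` matters when the search term contains characters such as `.` or `?` that mean something else in a regex.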

Lastly, the responses can be viewed in a variety of ways. You can see the raw response, just the headers, the HTML, or the rendered page.

Recap

Repeater is a manual tampering tool that lets you copy requests from other tools (Proxy, Target, etc.) and modify them before sending them again to the target. The Repeater makes it easy to modify the payload, and also provides links so that you can quickly repeat the attack in the browser.

Intruder

The last tool covered in this post is the Intruder tool. Imagine that we wanted to log in to an application but we didn't know the username or password. We could copy a login request over to the Repeater tool, and then manually select the username and password and replace them each time with some options from a list.

Of course, we'd have to do this hundreds or even thousands of times. If we want to automate a process like this, where we have a changing parameter and a known set of values that we want to try, then it's time to use the Intruder tool.

I'm going to use OWASP's WebGoat site for this example, since it has a login form. I have this running locally. I go to the login form on the site, and try a username/password combination (I know the correct combination but for this example, let's pretend that I don't know).

In the Proxy > HTTP History tab, I find the request that corresponds to my guess.

I right-click on the request view and select 'Send to Intruder'. I should see the Intruder tab light up orange, denoting that there's new activity in that tool.

Target Settings

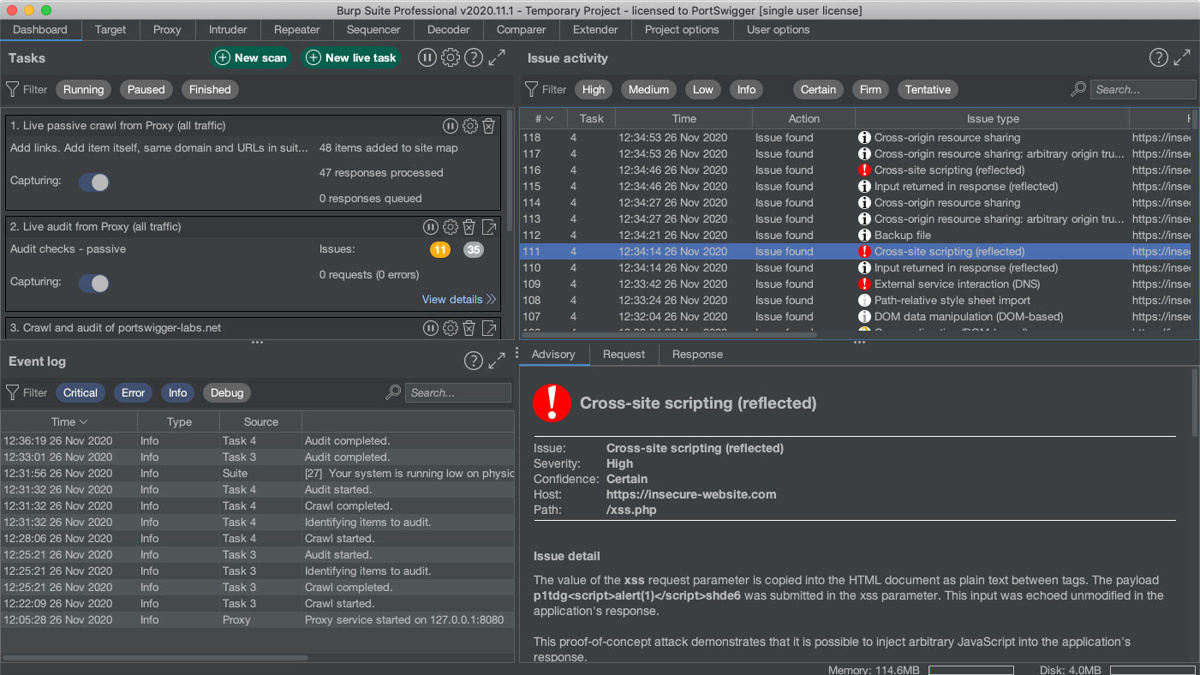

If we click over to the Intruder tab, we see this. **It's a very good idea to double-check these values each time, as the Intruder tool is going to send a LOT of requests to your target.** Make sure it's correct so you're not sending these requests to someone else!

Positions

Next, click on the Positions tab. Burp Suite has helpfully identified what it thinks are values that we want to parameterize. In this case, the session ID, the username and the password.

If we want to set this ourselves, we can click 'Clear'. Then, highlight the value you want to parameterize and click 'Add'. This will add squigglies (§ markers) around the word. Parameterizing values means that we can programmatically change the value in our requests.
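One way to picture a parameterized position is as a template with marked slots that get filled in for each request. The Python sketch below assumes § as the marker character and uses a made-up request body; the `fill_positions` helper and the `username`/`password` field names are hypothetical.

```python
import re

MARKER = "§"
POSITION = re.compile(f"{MARKER}[^{MARKER}]*{MARKER}")


def fill_positions(template, payloads):
    """Replace each marked position, in order, with the next payload."""
    values = iter(payloads)
    return POSITION.sub(lambda match: next(values), template)


# Hypothetical login request body with two marked positions.
template = "username=§admin§&password=§test§"

filled = fill_positions(template, ["guest", "guest"])
print(filled)
```

The original values between the markers ('admin' and 'test' here) are just placeholders; each request swaps them out for whatever payloads the attack supplies.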

In this example, I've parameterized the username and password values. Then, I selected 'Cluster bomb' as the attack type. This means that it will try every username and password combination that I give it (so the number of requests is the number of usernames multiplied by the number of passwords).

Payload

Next, click the Payload tab. Since we have two payloads (username and password), we will have to set each one individually. You can select one at a time from the first section:

We'll use 'Simple list' as the payload type for this, but there are many other options, like 'numbers' which could be used to find IDs or change a value in a longer string of characters.

If you have the Pro version, then you can use pre-defined lists in Burp. If you are using the free version, you can either load in a list (e.g. the RockYou list for passwords) or create your own list. For this example, I will make my own list of 4 possible usernames by typing them in and clicking 'Add'. Since Payload Set '1' was selected in the Payload Sets section, this applies to my first parameter, which is username.

Next, I have to set the Payload Set to '2' and make some possible passwords.

Now I can see that I've got a request count of 12, which makes sense. I've got 4 usernames and 3 passwords. If I try every combination (since I set my attack type to 'Cluster bomb'), then I will have 4 × 3 = 12 requests.
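The request count is just the size of the Cartesian product of the two lists, which a few lines of Python can confirm. The usernames and passwords below are invented for the sketch (only 'guest' / 'guest' comes from this walkthrough), and the ordering shown is `itertools.product`'s, not necessarily the order Burp sends requests in.

```python
from itertools import product

# Hypothetical payload lists: 4 usernames and 3 passwords.
usernames = ["admin", "webgoat", "test", "guest"]
passwords = ["password", "123456", "guest"]

# Every username paired with every password.
combinations = list(product(usernames, passwords))

print(len(combinations))
for username, password in combinations:
    print(username, password)
```

This is also why big lists get out of hand quickly: 1,000 usernames and 1,000 passwords would already mean a million requests.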

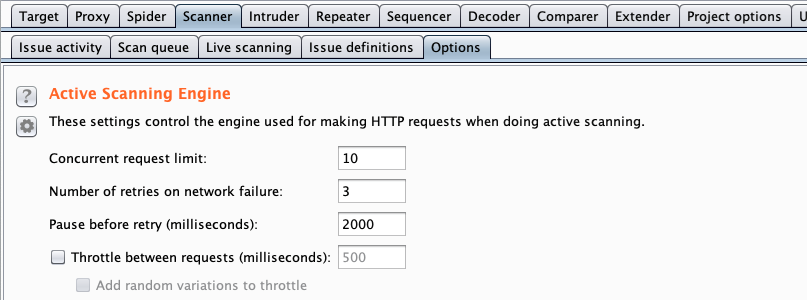

Next, I click 'Start Attack' in the Payload Sets options. If you have the free version, your attacks will be throttled, so big lists will take a long time. 12 requests should go pretty quickly, though.

I'll see a pop-up window that lists all the attacks. In 'real' attacks, this would be much longer, so I can use the Grep – Match tool in Intruder > Options, or just sort by HTTP status code or response length to find the interesting responses.

In this case, it's obvious since we have such a short list. The last combination, which is 'guest' / 'guest', returns a much longer response than the other attempts. This is the correct set of credentials (the added response length is from the login cookie we received).
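Sorting by response length works because failed logins tend to look alike, so the odd one out stands out. Here is a minimal Python sketch of that idea; the `unusual_lengths` helper and the response lengths are made up, since the real numbers are not given here.

```python
from collections import Counter


def unusual_lengths(results):
    """Return the results whose response length differs from the most common one."""
    counts = Counter(length for _, length in results)
    common_length, _ = counts.most_common(1)[0]
    return [(payload, length) for payload, length in results if length != common_length]


# Hypothetical (payload, response length) pairs from an attack.
results = [
    (("admin", "password"), 350),
    (("test", "123456"), 350),
    (("webgoat", "guest"), 350),
    (("guest", "guest"), 512),
]

interesting = unusual_lengths(results)
print(interesting)
```

A different length is only a lead, not proof of a successful login, so it is still worth opening the response itself.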

Options

As with the other tools, the Options tab is worth checking out. You can limit the number of threads, retries, and so on. You can also use the Grep sections to sort through your attack results more easily.

Recap

The Intruder tool automates requests when we have positions whose values we want to swap out, and we have a set of known values for those positions. We can configure the attack with user-, list- or Burp-defined values for each position, and use grep and other tools to sort through the results.

Summary

After discussing Burp Suite setup, and the Proxy and Target tools in the last blog post, this post discussed the Spider, Repeater and Intruder tools. Spider is used to more thoroughly map out a site, Repeater is used for manually tampering and replaying requests, and Intruder is used to automate a large number of requests with parameterized values.


In my last post I covered setup for Burp Suite, as well as the Proxy and Target tabs.

This blog post will cover the Spider, Intruder and Repeater tools, which start to show the usefulness and power of Burp Suite. Since everything is more fun with examples, I'll be using practice hacking sites to demo some of these features. : )

If you don't have Burp Suite set up yet, check out this blog post first.

Spider

First up is the Spider tool, which is a web crawler. Burp's website states:

Burp's cutting-edge web application crawler accurately maps content and functionality, automatically handling sessions, state changes, volatile content, and application logins.

In other words, it programmatically crawls a website(s) for all links and adds them to the Site Map view in the Target tab. If you worked through the last post and its examples, then you have already (passively) used the Spider tool.

Why is this useful? Having a complete site map helps you understand the layout of a website and makes you aware of all the different areas where vulnerabilities might exist (for example, seeing the gear icon on a page means that data can be / has been submitted). Doing that by browsing through the website is time-consuming, especially if you have a very complex website.

The Spider tool does all of that for you by recursively finding and requesting all links on a given website.

Make sure you set your scope before you run the Spider tool!

We covered scope in the last blog post, but it's a way of limiting what websites are shown to you within Burp, and what websites are used by other tools (which sites do you want to be sending requests to?)

Configuring Scope

In this example, I'll be using XSS Game first. First, I turn FoxyProxy on in my browser, and make sure that the settings in the Proxy > Options tab match my FoxyProxy options.

Next, I go to the Target > Scope tab to set my scope. I add a new scope and type 'xss-game'. If you do not set a scope when spidering, it will crawl things outside of your intended target. Depending on what those sites are, that might be bad. 🙃

If you go to Spider > Control, you can see that the scope defaults to 'Use suite scope', which is the scope we just defined. You can also set a custom scope if needed, which will function separate from the scope applied to other tools.

Start Spidering

To start spidering, you have a few different options. As we saw in the last blog post, you can right-click a request from numerous places (Proxy > HTTP History, Proxy > Intercept, Target > Site Map, etc.) and send the request to other tools.

In the Target > Site Map view, you can see that I've already visited one site from XSS Game (I visited the splash page).

Right-click this and select 'Spider this branch'. In other views, you can right-click a request and say 'Spider from here'.

When I do this, I can see that the Spider tab has lit up orange.

If I want to see how many requests are being made, or if I need to stop the tool for some reason (maybe things are getting recursively crazy), I can go to Spider > Control.

If the 'Spider is running' button is grey/depressed, that means it's currently running. You can press the button to stop it, and then clear any upcoming queues if need be.

Here are the results:

In this case, the results aren't that impressive. We probably could have found most of those by just browsing. But, hopefully it's clear how this would be useful for much larger websites.

Form Submissions and Other Options

I also ran the Spider tool on a local copy of OWASP's WebGoat tool (which meant that I had to add localhost to my scope before Spidering). WebGoat is an intentionally vulnerable web app used to teach various attacks, and includes two different login accounts.

When I started running the Spider tool, I saw this pop-up in Burp:

I already knew the login (it was provided), so I typed 'guest' and 'guest' into the username and password fields. But then the form submission pop-up appeared again. If this were a bigger application, this would get very annoying very quickly.

If we go to the Spider > Options tab and scroll down, we see that there are automated responses that we can choose for a login form:

It defaults to 'prompt for guidance' but we could change the settings with our known credentials.

If you scroll up or down on the Spider > Options tab, you'll see that there are automated responses for other forms as well. Be sure to look these over and decide whether to modify the field values, turn automated form submission off, or leave the defaults alone.
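One way to picture automated form submission is as a list of rules that map field names to values. The Python sketch below is my own illustration and not Burp's implementation; the rule patterns, the default value and the field names are all made up.

```python
import re

# Hypothetical rules: (regex matched against the field name, value to submit).
FORM_RULES = [
    (re.compile(r"user|login", re.IGNORECASE), "guest"),
    (re.compile(r"pass", re.IGNORECASE), "guest"),
    (re.compile(r"mail", re.IGNORECASE), "test@example.test"),
]
DEFAULT_VALUE = "test"  # used when no rule matches


def value_for_field(field_name):
    """Return the value of the first rule whose pattern matches the field name."""
    for pattern, value in FORM_RULES:
        if pattern.search(field_name):
            return value
    return DEFAULT_VALUE


form_fields = ["Username", "Password", "comment"]
filled = {name: value_for_field(name) for name in form_fields}
print(filled)
```

The first matching rule wins, so more specific patterns should come before general ones.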

The Options tab is also where you can turn off 'passive spidering' (where Burp adds information to your Site Map as you browse). Max link depth and parameterized requests per URL can also be configured on this page.

Recap

The Spider tool is a web crawler that recursively requests every link it finds, and adds it to the Site Map. Before you use it, it is important to set the scope (Target tab) and also define the Spider's behavior when it encounters logins or other forms.

Repeater

The Proxy tool lets you intercept requests, and the Site Map and Spider tools help show the breadth and depth of a target. But finding malicious payloads (or CTF flags) happens at the single-request level.

The Repeater tool is a manual tampering tool that lets you replay individual requests and modify them. This is often called 'manual' testing.

I'll be showing the Repeater tool on the XSS Game website (I'm doing this in Firefox; Chrome has an XSS blocking feature).

In the Proxy > HTTP History or Target > Site Map view, right-click on a single request and select 'Send to Repeater'. The Repeater tab should light up orange. Here, I'm right-clicking on a /level1 request for XSS Game where I've sent a query ('hi').

This will show up in the Repeater view as a numbered tab (which you can rename).

If I click 'Go' it will send the request again, and I can see that the query string of 'hi' (once again) did not allow me to move to the next level of XSS Game.

Let's try this again and swap out 'hi' for '<script>alert('hi')</script>'. I can do this by highlighting 'hi' and typing my new payload.
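Outside of Burp, the same tampering step amounts to rewriting one query parameter and leaving the rest of the request alone. Here is a small Python sketch of that. The URL and the `query` parameter name are my assumptions about what the XSS Game request looks like, and `with_param` is a helper I made up for this example. It only builds the new URL; it does not send anything.

```python
from urllib.parse import parse_qsl, urlencode, urlparse, urlunparse


def with_param(url, name, new_value):
    """Return url with every query parameter called `name` set to new_value."""
    parts = urlparse(url)
    params = [
        (key, new_value if key == name else value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
    ]
    # urlencode percent-encodes the payload so it survives in a URL.
    return urlunparse(parts._replace(query=urlencode(params)))


original = "https://xss-game.appspot.com/level1/frame?query=hi"  # assumed URL
tampered = with_param(original, "query", "<script>alert('hi')</script>")
print(tampered)
```

Repeater does the equivalent on the raw request text, which is why you can edit headers, cookies and bodies there just as easily as the query string.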

Then, I can click Go. I see in the output that my script tags are still intact, which means that my XSS attack might work. From here, I have two options. I can either:

- Copy/paste my payload into the website and do it manually, or

- Use Burp to automate a browser request.

I want to do the second option, so I right-click anywhere in the Response area, and say 'Request in browser' and select 'original session'.

This will pop up a window with a temporary link. If you copy/paste this into your browser, then you will be redirected to the website with the payload you created in Burp.

Once again, yes, this is a simple example, but it simplifies a lot of the trial-and-error that might occur while testing out a page.

Better yet, you also get forward and back history buttons, so if you want to go back to a previous request you made, it has already been saved in your history, and it's easily accessible.

Additionally, the response payloads will likely be much bigger in a 'real' website. You can use the buttons at the bottom of the Response view to search for terms (e.g. 'Success!'), matching either plain strings or regexes.
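The two checks I keep doing by eye in the Response view (is my payload still intact, and does some success string appear) can be sketched in a few lines of Python. The response bodies below are invented for the example, and both function names are my own.

```python
import html
import re


def find_matches(response_body, pattern, is_regex=False):
    """Return all matches of a literal string or a regex in the response body."""
    regex = re.compile(pattern if is_regex else re.escape(pattern))
    return regex.findall(response_body)


def reflected_unescaped(response_body, payload):
    """True if the payload appears verbatim, i.e. it was not HTML-escaped."""
    return payload in response_body


payload = "<script>alert('hi')</script>"
# Two made-up responses: one echoes the payload as-is, one escapes it first.
vulnerable = "<div>Sorry, no results for <b>" + payload + "</b></div>"
escaped = "<div>Sorry, no results for <b>" + html.escape(payload) + "</b></div>"

print(reflected_unescaped(vulnerable, payload))
print(reflected_unescaped(escaped, payload))
print(find_matches("Status: Success! Level 2 unlocked", r"Level \d+", is_regex=True))
```

A verbatim reflection is only a hint that the attack might work, which is why it is still worth confirming in the browser.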

Lastly, the responses can be viewed in a variety of ways. You can see the raw response, just the headers, the HTML, or the rendered page.

Recap

Repeater is a manual tampering tool that lets you copy requests from other tools (Proxy, Target, etc.) and modify them before sending them again to the target. The Repeater makes it easy to modify the payload, and also provides links so that you can quickly repeat the attack in the browser.

Intruder

The last tool covered in this post is the Intruder tool. Imagine that we want to log in to an application but don't know the username or password. We could copy a login request over to the Repeater tool, and then manually select the username and password and replace them each time with some options from a list.

Of course, we'd have to do this hundreds or even thousands of times. If we want to automate a process like this, where we have a changing parameter and a known set of values that we want to try, then it's time to use the Intruder tool.

I'm going to use OWASP's WebGoat site for this example, since it has a login form. I have this running locally. I go to the login form on the site, and try a username/password combination (I know the correct combination but for this example, let's pretend that I don't know).

In the Proxy > HTTP History tab, I find the request that corresponds to my guess.

I right-click on the request view and select 'Send to Intruder'. I should see the Intruder tab light up orange, denoting that there's new activity in that tool.

Target Settings

If we click over to the Intruder tab, we see this. **It's a very good idea to double-check these values each time, as the Intruder tool is going to send a LOT of requests to your target.** Make sure it's correct so you're not sending these requests to someone else!

Positions

Next, click on the Positions tab. Burp Suite has helpfully identified what it thinks are values that we want to parameterize. In this case, the session ID, the username and the password.

If we want to set this ourselves, we can click 'Clear'. Then, highlight the value you want to parameterize and click 'Add'. This will add § markers (squigglies) around the value. Parameterizing values means that we can programmatically change the value in our requests.
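Burp marks each position with a pair of § characters. To show what "programmatically change the value" means, here is a minimal Python sketch that swaps payloads into those marked positions. The field names and base values in the template are my assumptions (WebGoat's real request may look different), and `fill_positions` is a made-up helper.

```python
import re

# Each §...§ pair marks one payload position; the text inside is the base value.
MARKER = re.compile(r"§[^§]*§")


def fill_positions(template, payloads):
    """Replace each marked position, in order, with the matching payload."""
    values = iter(payloads)
    return MARKER.sub(lambda match: next(values), template)


template = "username=§admin§&password=§letmein§"  # hypothetical request body
print(len(MARKER.findall(template)))               # number of positions
print(fill_positions(template, ["guest", "guest"]))
```

Everything outside the markers is sent unchanged, so the rest of the request stays identical from attempt to attempt.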

In this example, I've parameterized the username and password values. Then, I selected 'Cluster bomb' as the attack type. This means that it will try every combination of the usernames and passwords that I give it (the number of requests is the size of one list multiplied by the size of the other).

Payload

Next, click the Payload tab. Since we have two payloads (username and password), we will have to set each one individually. You can select one at a time from the first section:

We'll use 'Simple list' as the payload type for this, but there are many other options, like 'numbers' which could be used to find IDs or change a value in a longer string of characters.

If you have the Pro version, then you can use pre-defined lists in Burp. If you are using the free version, you can either load in a list (e.g. the 'RockYou' list for passwords) or create your own list. For this example, I will make my own list of 4 possible usernames by typing them in and clicking 'Add'. Since Payload Set '1' was selected in the Payload Sets section, this applies to my first parameter, which is username.

Next, I have to set the Payload Set to '2' and make some possible passwords.

Now I can see that I've got a request count of 12, which makes sense. I've got 4 usernames and 3 passwords. If I try every combination (since I set my attack type to 'Cluster bomb'), then I will have 4 x 3 = 12 requests.
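The "every combination" behavior is a Cartesian product of the two lists, which is easy to check in Python. The usernames and passwords below are placeholders I picked for the example, and the order in which Burp actually sends the combinations may differ from `itertools.product`.

```python
from itertools import product

# Hypothetical payload lists: 4 usernames and 3 passwords.
usernames = ["admin", "webgoat", "test", "guest"]
passwords = ["password", "123456", "guest"]

# Every (username, password) pair, one request per pair.
attempts = list(product(usernames, passwords))
print(len(attempts))
```

This is also why list sizes matter so much: 1,000 usernames and 1,000 passwords would already mean a million requests.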

Next, I click 'Start Attack' in the Payload Sets options. If you have the free version, your attacks will be throttled, so big lists will take a long time. 12 requests should go pretty quickly, though.

I'll see a pop-up window that lists all the attacks. In 'real' attacks, this would be much longer, so I can use the Grep – Match tool in Intruder > Options, or just sort by HTTP status code or response length to find the interesting responses.

In this case, it's obvious since we have such a short list. The last combination, which is 'guest' / 'guest', returns a much longer response than the other attempts. This is the correct set of credentials (the added response length is from the login cookie we received).
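For longer result lists, the same "look for the odd one out" idea can be automated. This Python sketch treats the most common status code and response length as the baseline and returns anything that differs. The result rows are invented numbers, not output from my actual attack.

```python
from collections import Counter

# Hypothetical results: (username, password, status code, response length).
results = [
    ("admin", "password", 200, 1530),
    ("admin", "guest", 200, 1530),
    ("guest", "password", 200, 1530),
    ("guest", "guest", 200, 2248),
]


def outliers(rows):
    """Return rows whose (status, length) differs from the most common pair."""
    counts = Counter((status, length) for _, _, status, length in rows)
    baseline, _ = counts.most_common(1)[0]
    return [row for row in rows if (row[2], row[3]) != baseline]


print(outliers(results))
```

Real applications often vary the response length slightly from request to request, so in practice you might compare against a tolerance rather than an exact length.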

Options

As with the other tools, the Options tab is worth checking out. You can limit the number of threads, retries and so on. You can also use the Grep sections to sort through your attack results more easily.

Recap

The Intruder tool automates requests when we have positions whose values we want to swap out, and we have a set of known values for those positions. We can configure the attack with user-, list- or Burp-defined values for each position, and use grep and other tools to sort through the results.

Summary

After discussing Burp Suite setup, and the Proxy and Target tools in the last blog post, this post discussed the Spider, Repeater and Intruder tools. Spider is used to more thoroughly map out a site, Repeater is used for manually tampering and replaying requests, and Intruder is used to automate a large number of requests with parameterized values.